I want to improve ImpEx performance on a multi-core system. What should I do?

You can improve ImpEx performance by increasing the number of threads used by the ImpEx import engine. Find the optimal number of worker threads for the backend server dedicated to importing data. Start with a value between 1 and 1.5 times the number of cores; for example, with 6 cores, try 6 to 9 worker threads. The following shows the value in local.properties:

```properties
=6
```
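As a quick aid, the 1x to 1.5x rule of thumb can be turned into a starting range. This is a minimal sketch: the class and method names (`WorkerThreadRange`, `suggest`) are hypothetical, the core count comes from the JVM (which reports logical processors, so hyperthreading may inflate it), and the result is only a starting point for measuring, not a tuned value.

```java
import java.util.Arrays;

/** Hypothetical helper: suggests a starting range of ImpEx worker threads. */
final class WorkerThreadRange {

    private WorkerThreadRange() {}

    /**
     * Returns {min, max} worker threads for the given core count, following
     * the rule of thumb "between 1 and 1.5 times the number of cores".
     * The upper bound is rounded down to a whole thread.
     */
    static int[] suggest(int cores) {
        if (cores < 1) {
            throw new IllegalArgumentException("cores must be >= 1");
        }
        int min = cores;
        int max = (cores * 3) / 2; // integer form of 1.5 x cores, rounded down
        return new int[] {min, max};
    }

    public static void main(String[] args) {
        // Logical processors visible to this JVM (may differ from physical cores).
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println(cores + " cores -> try " + Arrays.toString(suggest(cores)) + " worker threads");
    }
}
```

For 6 cores, `suggest(6)` returns `{6, 9}`, matching the example above. Measure import times across that range before settling on a value.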

You have a large, one-time ImpEx file that imports new data. Which header do you prefer: INSERT or INSERT_UPDATE?

When you execute an ImpEx with an INSERT_UPDATE header, hybris always runs a SELECT query before writing the data. The SELECT query looks up an existing item using the unique attributes specified in the INSERT_UPDATE header; the item is updated if it is found and inserted otherwise.

Since you are importing new data and know that none of it exists yet, you can use the INSERT header, which skips that lookup and so reduces the number of database queries.
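To make the difference concrete, here is a toy model (not hybris code) that counts simulated database statements when every row is new. `ToyItemTable`, `insert`, and `insertUpdate` are hypothetical names; the model assumes one lookup plus one write per INSERT_UPDATE line and one write per INSERT line, and ignores batching and caching.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy stand-in for an item table keyed by a unique attribute such as a code. */
final class ToyItemTable {
    private final Map<String, String> rows = new HashMap<>();
    private int queries = 0; // counts every simulated SQL statement

    /** INSERT: write the row directly, without a lookup. */
    void insert(String code, String value) {
        queries++; // INSERT statement
        rows.put(code, value);
    }

    /** INSERT_UPDATE: look up by the unique key first, then update or insert. */
    void insertUpdate(String code, String value) {
        queries++; // SELECT ... WHERE code = ?
        if (rows.containsKey(code)) {
            queries++; // UPDATE existing row
        } else {
            queries++; // INSERT new row
        }
        rows.put(code, value);
    }

    int queries() { return queries; }

    int size() { return rows.size(); }

    public static void main(String[] args) {
        List<String> newCodes = List.of("p1", "p2", "p3", "p4");
        ToyItemTable withInsert = new ToyItemTable();
        ToyItemTable withInsertUpdate = new ToyItemTable();
        for (String code : newCodes) {
            withInsert.insert(code, "v");
            withInsertUpdate.insertUpdate(code, "v");
        }
        System.out.println("INSERT: " + withInsert.queries() + " queries");              // 4
        System.out.println("INSERT_UPDATE: " + withInsertUpdate.queries() + " queries"); // 8
    }
}
```

With only new rows, the model issues half as many statements for INSERT, which is the saving the answer above relies on.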

Why is it good practice to avoid mixing headers and multiple passes?

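ImpEx works best if you separate the data by header, keeping all lines for one header together instead of switching back and forth between headers.

If the whole ImpEx can be imported in one pass, performance improves, especially for large ImpEx files: lines whose references cannot be resolved yet are set aside and retried in a later pass, so every extra pass processes part of the data again.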

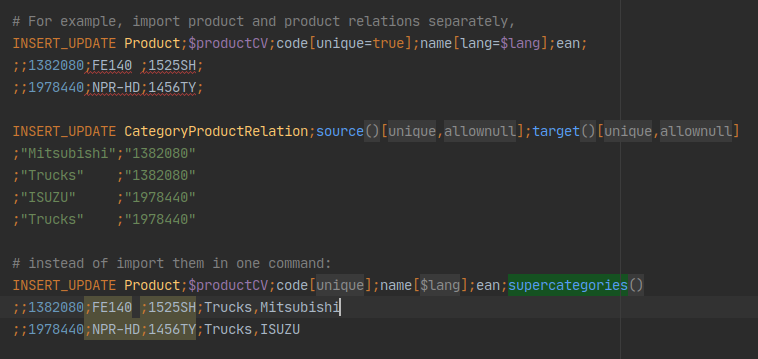
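If the data is generated by a program that emits lines in mixed order, one option is to regroup it before writing the ImpEx file, so each header appears once with all of its lines, and headers for referenced types come before the types that reference them. The sketch below does only that regrouping; `HeaderGrouping`, `ImpexLine`, `groupByHeader`, and the explicit header-order parameter are hypothetical, and the header strings in the usage are placeholders rather than complete ImpEx headers.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical regrouping step applied before an ImpEx file is written. */
final class HeaderGrouping {

    /** One data line together with the header it belongs to. */
    static final class ImpexLine {
        final String header;
        final String values;

        ImpexLine(String header, String values) {
            this.header = header;
            this.values = values;
        }
    }

    /**
     * Groups lines so each header appears once, followed by all of its lines.
     * Headers listed in headerOrder come first, in that order (referenced types
     * first); any other header follows in order of first appearance.
     * Line order within a header is preserved.
     */
    static Map<String, List<String>> groupByHeader(List<ImpexLine> lines, List<String> headerOrder) {
        Map<String, List<String>> grouped = new LinkedHashMap<>();
        for (String header : headerOrder) {
            grouped.put(header, new ArrayList<>());
        }
        for (ImpexLine line : lines) {
            grouped.computeIfAbsent(line.header, h -> new ArrayList<>()).add(line.values);
        }
        grouped.values().removeIf(List::isEmpty); // drop listed headers that had no lines
        return grouped;
    }
}
```

Writing each map entry as one header line followed by its value lines yields a file in which no header repeats and, if the order list is right, references can resolve in a single pass.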

You have to import items with many-to-many relations. How will you do this?

Many-to-many relations are stored in their own database table. So if you try to perform a large import together with a few many-to-many relations in a single step, you will run into a significant performance issue. Instead, import the items first and then import the many-to-many relations separately, step by step.
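One possible way to carry the step-by-step approach further is to split each relation's link rows into fixed-size chunks and import each chunk as its own step after the items exist. This is an assumption on top of the answer above, not a documented hybris mechanism; the sketch covers only the chunking, and `RelationBatches`, `chunk`, and the batch size are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that splits relation link rows into import batches. */
final class RelationBatches {

    private RelationBatches() {}

    /** Splits rows into consecutive chunks of at most batchSize rows each. */
    static <T> List<List<T>> chunk(List<T> rows, int batchSize) {
        if (batchSize < 1) {
            throw new IllegalArgumentException("batchSize must be >= 1");
        }
        List<List<T>> chunks = new ArrayList<>();
        for (int i = 0; i < rows.size(); i += batchSize) {
            int end = Math.min(i + batchSize, rows.size());
            chunks.add(new ArrayList<>(rows.subList(i, end))); // copy, so chunks outlive the source view
        }
        return chunks;
    }

    public static void main(String[] args) {
        // Placeholder link rows "source;target" for one many-to-many relation.
        List<String> links = List.of("c1;p1", "c1;p2", "c2;p1", "c2;p3", "c3;p2");
        for (List<String> batch : chunk(links, 2)) {
            System.out.println("import step: " + batch);
        }
    }
}
```

Keeping each relation in its own steps means a slow or failing relation import does not hold back the item import itself.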

What else can I do to improve performance for large ImpEx files?

Some service layer interceptors can be skipped safely to boost performance for large ImpEx files. This has a significant effect, especially if custom interceptors contain complex logic. To avoid unnecessary delays, you can write a bypass interceptor that skips the original logic when a special flag is set in the session. The following shows example code for bypassing interceptors:

```java
public class FastImportInterceptor implements RemoveInterceptor, PrepareInterceptor { /* ... */ }
```
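Since only the class declaration is shown, here is a self-contained sketch of the same idea: a wrapper that forwards to the real interceptor unless a session flag is set. It deliberately avoids the hybris API; `Interceptor`, `Session`, `BypassingInterceptor`, and the `fastImport` attribute name are hypothetical stand-ins. In a real project the wrapper would implement the platform's `PrepareInterceptor` and `RemoveInterceptor` interfaces instead.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for a service layer interceptor (not the hybris API). */
interface Interceptor {
    void intercept(Object model);
}

/** Hypothetical stand-in for a session that holds named attributes. */
final class Session {
    private final Map<String, Object> attributes = new HashMap<>();

    void setAttribute(String name, Object value) { attributes.put(name, value); }

    Object getAttribute(String name) { return attributes.get(name); }
}

/** Wraps an expensive interceptor and skips it while the session flag is set. */
final class BypassingInterceptor implements Interceptor {
    static final String FAST_IMPORT_FLAG = "fastImport"; // hypothetical attribute name

    private final Interceptor original;
    private final Session session;

    BypassingInterceptor(Interceptor original, Session session) {
        this.original = original;
        this.session = session;
    }

    @Override
    public void intercept(Object model) {
        if (Boolean.TRUE.equals(session.getAttribute(FAST_IMPORT_FLAG))) {
            return; // flag set: skip the original logic during the bulk import
        }
        original.intercept(model); // normal path: run the wrapped interceptor
    }
}
```

Set the flag only around the bulk import and clear it in a `finally` block, so regular changes still pass through the full interceptor logic. Only wrap interceptors whose work is genuinely safe to skip for the imported data.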

We have a large ImpEx file to import on a server where other cron jobs are running. How can we improve performance when importing data?

Disable the other cron jobs while the data is being imported, because they compete with the ImpExImportCronJob for limited resources such as CPU, memory, and database connections.
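The pattern behind this advice is: record which jobs are active, disable them, run the import, and re-enable exactly those jobs afterwards, even if the import fails. The sketch uses a hypothetical `JobControl` interface (with an in-memory implementation for demonstration) rather than the hybris cron job API; you would map it to however your project deactivates cron jobs or their triggers.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical abstraction over whatever switches cron jobs on and off. */
interface JobControl {
    List<String> activeJobs();

    void setActive(String jobCode, boolean active);
}

final class ImportWindow {

    private ImportWindow() {}

    /** Disables all active jobs, runs the import, then restores only those jobs. */
    static void runWithJobsPaused(JobControl jobs, Runnable importTask) {
        List<String> paused = new ArrayList<>(jobs.activeJobs()); // snapshot before changing anything
        for (String code : paused) {
            jobs.setActive(code, false);
        }
        try {
            importTask.run();
        } finally {
            for (String code : paused) {
                jobs.setActive(code, true); // re-enable even if the import threw
            }
        }
    }
}

/** In-memory JobControl used only to demonstrate the pattern. */
final class InMemoryJobs implements JobControl {
    final Map<String, Boolean> state = new LinkedHashMap<>();

    @Override
    public List<String> activeJobs() {
        List<String> active = new ArrayList<>();
        state.forEach((code, on) -> { if (on) active.add(code); });
        return active;
    }

    @Override
    public void setActive(String jobCode, boolean active) {
        state.put(jobCode, active);
    }
}
```

Taking the snapshot first means jobs that were already disabled before the import stay disabled afterwards.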

Created based on official documentation